Proof of R2FS! Performance Claims

For the (rightly) skeptical among you...

As a follow-up to yesterday's post announcing the upcoming release of Syncplify R2FS!, a reverse-remote storage engine for Syncplify Server!, we'd like to provide more context for our performance claims.

We understand that users familiar with "traditional network shares" (e.g., SMB/CIFS) may have concerns about the performance gap between theoretical network throughput and measured NAS performance. It's not uncommon to observe significant performance drops, especially under heavy workloads.

Moreover, it's well known that adding security often comes at a performance cost. R2FS! is undoubtedly highly secure, featuring mTLS (mutual TLS authentication) and grade-A TLS 1.3 cipher suites. So, can R2FS! truly deliver the performance we claim?

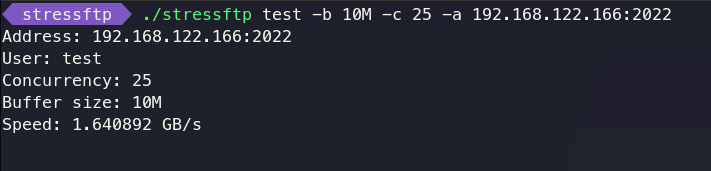

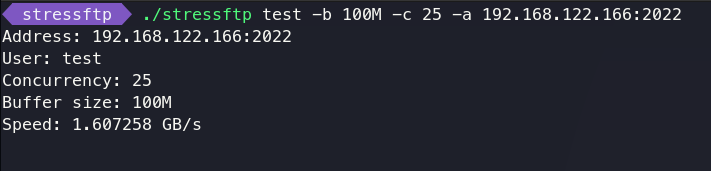

To address these concerns, we developed a lightweight command-line SFTP stress-test tool and ran it against Syncplify Server! V7 (current beta) backed by R2FS! (also current beta). Below you can see the results of our tests.
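For readers curious how an aggregate figure of this kind is usually derived, here is a minimal sketch of a concurrent stress-test harness. It is not our actual tool: `fake_round_trip` is a hypothetical in-memory stand-in for one client's SFTP upload followed by a download, and counting both directions toward the total is an assumption made for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor

MIB = 2**20  # bytes in one mebibyte
GIB = 2**30  # bytes in one gibibyte


def fake_round_trip(payload: bytes) -> int:
    """Hypothetical stand-in for one client's upload-then-download.

    It only copies the payload in memory; a real tool would perform
    the two transfers over SFTP. Returns the number of bytes moved.
    """
    uploaded = bytearray(payload)   # pretend upload
    downloaded = bytes(uploaded)    # pretend download
    return len(uploaded) + len(downloaded)


def run_stress_test(clients: int, file_size: int, transfer=fake_round_trip):
    """Run `clients` concurrent transfers; return (bytes moved, GiB/s)."""
    payload = bytes(file_size)
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=clients) as pool:
        moved = sum(pool.map(transfer, [payload] * clients))
    elapsed = time.perf_counter() - start
    # Aggregate throughput = total bytes moved / wall-clock time.
    return moved, moved / elapsed / GIB


total_bytes, gib_per_s = run_stress_test(clients=25, file_size=10 * MIB)
print(f"moved {total_bytes / MIB:.0f} MiB at {gib_per_s:.2f} GiB/s "
      "(in-memory stand-in, not a real measurement)")
```

The key point is that throughput is computed over the wall-clock time of the whole run, so it reflects sustained aggregate performance rather than the best single transfer.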

For context, the theoretical maximum throughput of a 10 Gigabit Ethernet (10 GbE) link is approximately 1.164 GiB/s for uncompressed data. However, R2FS! includes built-in, adaptive, ultra-fast, memory-efficient streaming compression, so the effective throughput can exceed this limit when the data is compressible.
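The 1.164 GiB/s figure comes from converting a decimal line rate (10 × 10^9 bits per second) into binary gibibytes (2^30 bytes). A quick sketch of that arithmetic, ignoring protocol overhead; the function name is ours, purely for illustration:

```python
GIB = 2**30  # bytes in one gibibyte


def gbps_to_gibps(gigabits_per_second: float) -> float:
    """Convert a line rate in Gb/s (10^9 bits) to GiB/s (2^30 bytes)."""
    bytes_per_second = gigabits_per_second * 1e9 / 8
    return bytes_per_second / GIB


print(f"{gbps_to_gibps(10):.3f} GiB/s")  # prints "1.164 GiB/s"
```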

Test Results

Let’s start with an average scenario: 25 concurrent clients, each uploading then downloading a 10 MiB file:

But what if the same 25 clients were transferring much bigger files? Say, a 100 MiB file for each client…

Or, what if we had 100 concurrent clients transferring a 10 MiB file each?

In all three tests, Syncplify Server! backed by R2FS! achieved an average sustained performance of ~1.6 GiB/s (~13.7 Gb/s), surpassing the network's theoretical uncompressed limit, as expected.
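To sanity-check these numbers yourself, the sketch below converts 1.6 GiB/s back to Gb/s and estimates the average compression ratio needed to reach it. It assumes a 10 Gb/s link that is the only bottleneck and ignores protocol overhead; the helper names are hypothetical.

```python
GIB = 2**30  # bytes in one gibibyte


def gibps_to_gbps(gib_per_second: float) -> float:
    """Convert GiB/s (2^30 bytes) to Gb/s (10^9 bits)."""
    return gib_per_second * GIB * 8 / 1e9


def min_compression_ratio(measured_gibps: float, link_gbps: float) -> float:
    """Average compression ratio needed for the measured payload
    rate to fit through a link of the given raw line rate."""
    return gibps_to_gbps(measured_gibps) / link_gbps


print(f"{gibps_to_gbps(1.6):.1f} Gb/s")                  # prints "13.7 Gb/s"
print(f"{min_compression_ratio(1.6, 10):.2f}x average")  # prints "1.37x average"
```

In other words, under those assumptions the streaming compression needs to shrink the data by a little over a third on average to sustain the measured rate.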